
How to make collaborative robots in manufacturing fab shops

Safety technology spurs the potential of human-robot interaction

- By Tim Heston

- Updated May 5, 2023

- April 27, 2021


Visit an advanced assembly line at a major OEM and you’ll likely see robots everywhere. They position, assemble, and move work down the line methodically and predictably, until a bird flies through a light curtain and the whole line stops.

“These are some of the world’s most sophisticated manufacturers, and they can’t get the birds out. Nature finds a way.”

So said Patrick Sobalvarro, president/CEO of Waltham, Mass.-based Veo Robotics, a company that has introduced a new way to safeguard robotics and automation in general. The idea behind Veo’s technology is to enable robots and humans to work together in new ways. The technology essentially makes any robot a collaborative one.

When those in automation circles talk of collaborative robots, or cobots, they almost always are referring to the small power- and force-limiting robots that operators can work with and around in close proximity. Their safety attributes—including the fact they can touch an obstruction and stop, and the fact that the robot’s joints have a little give to them—make them collaborative. Sure, if a cobot wields a collection of sharp workpieces all day long, it still presents a safety hazard. Regardless, their design allows operators and others to work near them, no light curtains or physical barriers required.

Drawbacks of Human-Robot Collaboration in Manufacturing

Of course, power- and force-limiting robots have their drawbacks. Most designs offer only limited payload capacity, and their positioning accuracy isn’t sufficient for many applications. For instance, integrating such robots on a press brake might entail some positioning aids, like a sidegauge or frontgauge in addition to a backgauge, which itself might be magnetized. The exact requirements depend on the part requirements, but the fact remains that typical power- and force-limiting robots offer neither the payload capacity nor the positioning accuracy of a conventional robot arm.

Still, far from being power- and force-limiting, conventional robots can be extraordinarily dangerous without the proper safeguarding. Even a slow-moving robot arm can crush an appendage caught in the wrong place at the wrong time.

Sobalvarro has been involved in the collaborative automation arena for years. As Rethink Robotics’ first president, he visited various OEMs and warehouses to get a feel for what industry was looking for. He found that power- and force-limiting robots had an extremely bright future for the right applications, but he also found that such robots had their limits.

“I toured the Spartanburg [South Carolina] BMW plant where I saw many ABB and Kuka robots. We walked down the production line with several manufacturing engineers along with others on the Rethink team. The engineers would show me a process step, like putting a door on a vehicle, and they’d ask, ‘Would you be able to do this with your robot?’ And I had to tell them no, the robot just wasn’t big enough, it didn’t have the reach, and wouldn’t be strong enough [to handle the payload].”

The problem was that those critical steps, all performed by conventional robots, were the constraint process of the entire line. If they shut down from, say, a light curtain being broken by an employee in the wrong place (or by a flying bird), the entire line would stop. At the same time, he recognized the extraordinary benefit of collaborative robotics. Could conventional robots share the safety attributes of their power- and force-limiting cousins and, in doing so, become collaborative?

Advances in Human-Robot Collaboration in Manufacturing

In 2008 Sobalvarro witnessed another technological advancement, working with a company that developed people-tracking software for the retail industry. “It tracked people in retail stores for loss prevention and provided what that sector calls ‘store intelligence.’ It can sense, for example, when someone hangs out by big-screen TVs and doesn’t receive customer service. Customers included the likes of Walmart and Best Buy. Seeing that, I knew it was possible to track people using computer vision, but I knew it wasn’t reliable enough at the time to be used as a safety solution.”

But it was at this point that Sobalvarro realized the potential. “We could make conventional robots safe for human interaction by monitoring the volume around them. Computers weren’t fast enough and 3D vision chip sets weren’t good or cheap enough, but I kept the idea in the back of my mind.”

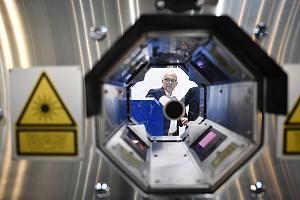

An assembler works safely near a robot that, with FreeMove, can effectively sense a human’s presence.

That idea planted the seed for Veo Robotics. Now a 5-year-old company, Veo has introduced FreeMove, an intelligent vision system that, for the right application, aims to eliminate the need for certain physical barriers, light curtains, or scanners, allowing people to work with, not away from, a conventional robot. Technology caught up with Sobalvarro’s idea, and earlier this year the company announced a partnership with its first integrator, Calvary Robotics in Greenville, S.C.

The system has been in use at significant OEMs. Besides saving space, it has helped automate certain tasks that before just weren’t practical. At one appliance-maker, for instance, a large robot lifts and presents a stainless steel refrigerator side panel to the operator, orienting it so the worker can add certain components in the right places. The act of assembling those components wasn’t suitable for robotics; the variables and process complexities were just too high. But the act of presenting that large, heavy sheet metal part was ripe for material handling robotics—but only if the company found a way for the operator to work closely and safely with the robot itself. In this case, FreeMove filled a need.

The technology, which is compliant with ISO 13849 (safety of machinery) and other safety standards (including certification for inclusion in an ISO 15066 collaborative workcell), uses dual-channel communication among all sensors and the FreeMove engine itself.

“All these sensors give a 2.5D image,” Sobalvarro said. “The 2D comes from the area of view, while the half dimension gives the distance of what the sensors are seeing. The engine takes this information and fuses it into a single 3D view of the whole cell. And from that, the system knows many things. It can find the robot, and it knows the joint angles and other information obtained from the robot controller, checking to see if those angles are correct. It also knows the shape of the workpiece the robot is handling, obtained from the CAD drawing, so it knows it’s OK to pick those up and manipulate them. And it knows the static fixtures. Anything else that’s big enough is considered potentially to be a human.”

With the potential human detected, the system assumes that human could move all or part of his or her body at 2 m per second. Using that as a foundation, the system tracks where the humans are and controls the robot accordingly, allowing for stopping distances and other safety-related parameters.
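To make the arithmetic behind that 2-m-per-second assumption concrete, here is a minimal sketch of a separation check, loosely modeled on the speed-and-separation-monitoring idea described in ISO/TS 15066. It is not Veo's algorithm. The `RobotMotion` fields, the `margin_m` allowance, and every number other than the 2 m per second figure are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# From the article: a potential human is assumed able to move at 2 m per second.
HUMAN_SPEED_M_S = 2.0


@dataclass
class RobotMotion:
    """Hypothetical description of the robot's current motion."""
    speed_m_s: float            # robot speed toward the person
    reaction_time_s: float      # time to detect the person and command a stop
    stopping_time_s: float      # time for the robot to come to rest once commanded
    stopping_distance_m: float  # distance the robot travels while braking


def protective_separation_m(robot: RobotMotion, margin_m: float = 0.1) -> float:
    """Simplified minimum human-robot distance needed to stop in time."""
    # The person keeps approaching during both detection and braking.
    human_travel = HUMAN_SPEED_M_S * (robot.reaction_time_s + robot.stopping_time_s)
    # The robot keeps moving at full speed until the stop command takes effect.
    robot_travel = robot.speed_m_s * robot.reaction_time_s
    return human_travel + robot_travel + robot.stopping_distance_m + margin_m


def must_stop(distance_m: float, robot: RobotMotion, margin_m: float = 0.1) -> bool:
    """True when the measured distance is below the protective separation."""
    return distance_m < protective_separation_m(robot, margin_m)
```

Under these made-up numbers, a robot moving at 1 m per second with a 0.1-second reaction time, a 0.4-second stopping time, and a 0.2 m braking distance would need about 1.4 m of clearance. A faster robot or a slower controller pushes that distance out, which is why stopping performance drives how close a person can work.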

“The compute engine itself has five CPUs, including two very powerful CPUs on each of the two motherboards,” Sobalvarro said, “plus a safety processor on whatever board [the system integrates with]. It’s comparing outputs for safety. The technology uses 3D images of the workcell and classifies objects within that workcell. And we don’t use machine learning, either, because that’s a probabilistic technology. Probability isn’t good enough for us.”

Because safety is at stake, “our default is, ‘stop everything,’” Sobalvarro explained. “If someone runs a forklift into the compute box, we stop everything. The absence of knowledge always means ‘stop.’”
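The "absence of knowledge means stop" rule can be sketched as a comparison of two redundant channels. This is only an illustration of the fail-safe principle Sobalvarro describes; the function name, the `"run"`/`"stop"` strings, and the use of `None` for a missing report are assumptions, not part of FreeMove.

```python
from typing import Optional

RUN = "run"
STOP = "stop"


def safe_command(channel_a: Optional[str], channel_b: Optional[str]) -> str:
    """Allow motion only when both channels independently report RUN.

    A missing report (None), a disagreement between channels, or any
    unrecognized value falls through to STOP, so a lack of information
    never permits motion.
    """
    if channel_a == RUN and channel_b == RUN:
        return RUN
    return STOP
```

The design choice worth noting is that `RUN` is the only case spelled out. Everything else, including a failure nobody anticipated, lands on `STOP` by default.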

Productivity and Safety for Collaborative Robots in Manufacturing

To ensure productivity, the Veo team has worked to increase that knowledge to account for myriad situations. In conventional situations, besides the workpiece, the robot, and other known entities within the cell, the system treats any unknown object larger than 15.6 liters in volume as a “human,” though not in every case. For instance, Sobalvarro described a situation where one robot cell had a worktable large enough for someone to hide under. In this case, if the vision system sees an object that shouldn’t be there adjacent to the table, it assumes it could be someone’s arm or head. It sees that object as a human who, again, could move at 2 m per second toward the robot. If the robot were to get too close to this “potentially human” object, it would shut down.

That said, if the system were to detect an unknown object not beside but on that table, it would recognize it for what it is: an object, like a thermos or cup, and not a human. Unlike a scanner or light curtain, the vision system won’t shut down just because someone left a soda can in its path.
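The rules described above can be summarized in a short rule-based sketch. It deliberately uses fixed rules rather than a learned model, in keeping with Sobalvarro's point about avoiding probabilistic methods. The 15.6-liter threshold comes from the article. The `Blob` fields and the ordering of the checks are assumptions made for illustration, and a real system would derive them from fused 3D sensor data.

```python
from dataclasses import dataclass
from enum import Enum

# From the article: unknown objects above this volume are treated as potential humans.
HUMAN_VOLUME_THRESHOLD_L = 15.6


class Label(Enum):
    KNOWN = "known"                      # robot, workpiece, or static fixture
    OBJECT = "object"                    # small unknown item, such as a soda can
    POTENTIAL_HUMAN = "potential_human"  # must be protected as a person


@dataclass
class Blob:
    """Hypothetical summary of one detected volume in the workcell."""
    volume_l: float
    is_known: bool                         # matches the robot, CAD workpiece, or a fixture
    adjacent_to_hiding_spot: bool = False  # e.g. beside a table someone could be under


def classify(blob: Blob) -> Label:
    """Apply the article's rules in order, erring toward 'potential human'."""
    if blob.is_known:
        return Label.KNOWN
    # Something small poking out beside a hiding spot could be an arm or a head.
    if blob.adjacent_to_hiding_spot:
        return Label.POTENTIAL_HUMAN
    if blob.volume_l > HUMAN_VOLUME_THRESHOLD_L:
        return Label.POTENTIAL_HUMAN
    return Label.OBJECT
```

For example, a 1-liter thermos sitting on the table would come back as `Label.OBJECT`, while the same 1-liter volume appearing beside the table would come back as `Label.POTENTIAL_HUMAN`.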

Because the robot operates in an environment that could be full of humans, the stop-restart cycle is much different. Such collaborative automation wouldn’t be productive if every time a human got too close, a cell shutdown required a call to maintenance personnel for a lockout/tagout procedure. “For this reason, we automatically restart,” Sobalvarro said. “If the human sees the robot stop, he takes a step back, and the robot resumes. This leads to faster takt times and fewer line stops.”
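The stop-and-resume behavior can be pictured as a two-state loop with no manual reset. The sketch below is an assumption-laden illustration, not FreeMove's logic: the state names are invented, and the `resume_margin_m` buffer (added so the robot does not flicker between states when someone hovers at the boundary) is a hypothetical parameter.

```python
RUNNING = "running"
PAUSED = "paused"


def next_state(state: str, distance_m: float, protective_m: float,
               resume_margin_m: float = 0.2) -> str:
    """Pause when a person is too close; resume once they step back."""
    # Inside the protective distance: always pause.
    if distance_m < protective_m:
        return PAUSED
    # Already paused: wait until the person clears an extra margin.
    if state == PAUSED and distance_m < protective_m + resume_margin_m:
        return PAUSED
    # Otherwise run, with no operator reset required.
    return RUNNING
```

With a 1.4 m protective distance, a person at 1.0 m pauses the robot, a step back to 1.5 m keeps it paused, and a further step to 1.7 m lets it resume.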

The technology could make various areas of the fab shop, like the bending cell or hardware insertion cell, look a lot different. Because people could work in close proximity to the automation, they could carry certain workpieces through certain steps that fall outside the automation’s capability. Alternatively, a bending or insertion cell could be entirely automated for certain products, then switch to a manual cell for other products, with a quick transition between the two modes of operation.

Like any other technology, FreeMove does have several limitations. First, it has yet to be applied to any arc welding or laser application. If it were, the light from those processes, unless shrouded in some way, would saturate the sensors. That wouldn’t create an unsafe situation; it would just force everything to stop.

It also is now limited to workcells up to about 64 sq. m. Sensors cannot cover massive workspaces, like those involved in aerospace, shipbuilding, or large equipment manufacturing. That said, such a capability might be on the horizon with what Veo calls Distributed FreeMove, where sensors placed throughout a factory could one day make safeguarding as ubiquitous as electricity or compressed air. “The idea is to have [safeguarding] generally available,” Sobalvarro said. “If you reconfigure the factory floor, it will remain an element of workcell setup and risk assessment, but it will always be there. It’s one uniform solution that’s broadly flexible and adaptable.”

As of now, the system works only in specific workcells. But if such technology eventually becomes distributed, the factory of the future could look very different.

About the Author

Tim Heston

2135 Point Blvd

Elgin, IL 60123

815-381-1314

Tim Heston, The Fabricator's senior editor, has covered the metal fabrication industry since 1998, starting his career at the American Welding Society's Welding Journal. Since then he has covered the full range of metal fabrication processes, from stamping, bending, and cutting to grinding and polishing. He joined The Fabricator's staff in October 2007.
