
Artificial intelligence makes machine maintenance more effective

By mimicking how a human ear hears, AI could be a valuable maintenance tool

- By Tim Heston

- February 7, 2018

- Testing and Measuring

Sebastien Christian didn’t expect he’d be working with companies that operate forging presses, but new technology is sometimes like that. Create something new for one industry, and another unexpectedly steps in and embraces it.

In 2015 the entrepreneur won the Best App Award at the Mobile World Congress show in Barcelona. His cloud-connected app was built around an artificial intelligence (AI) engine that mimics how the human brain hears and interprets sounds.

“That first app would listen to sounds from the environment, like a knock at the door, the doorbell, or a fire alarm, and push a visual cue to your smartphone. Sebastien thought all the health care companies and hearing aid companies would come calling. But industrial companies reached out instead. They said, ‘We have engineers and maintenance techs who rely on sound as a leading indicator that shows something is going on.’”

So said Jags Kandasamy, chief product officer for OtoSense, a Palo Alto, Calif.-based company that has brought its AI hearing engine to the manufacturing industry. The technology has been adopted for CNC machining and press forging and, according to the company, has big potential for use in metal fabrication.

The technology uses whatever sensors the application calls for, such as accelerometers, microphones, optical sensors, and pressure sensors. These sensors detect vibration, including sound waves, and send the data to a cloud-based AI engine for interpretation.

As Christian, founder and CEO of OtoSense, explained, “Vibration is the source, and you capture or acquire it. Sometimes it’s measuring acceleration, like the motion of a solid itself. Or it propagates in air as a sound,” which can be measured by an area microphone.
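Before any engine can interpret a captured vibration, the raw samples are usually reduced to a compact "signature." The sketch below shows one common, generic way to do that: it splits a signal's frequency spectrum into bands and reports each band's share of the total energy. It is not OtoSense's method, which the article does not describe; the function names `tone`, `dft_magnitudes`, and `band_energies`, the band count, and the 800-Hz sample rate are all hypothetical choices for illustration.

```python
import cmath
import math


def tone(freq_hz, sample_rate_hz, n_samples):
    """Generate a pure sine wave, standing in for a captured vibration signal."""
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate_hz) for t in range(n_samples)]


def dft_magnitudes(samples):
    """Magnitudes of DFT bins 0..N/2-1 (naive O(N^2) DFT; a real system would use an FFT)."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples)))
        for k in range(n // 2)
    ]


def band_energies(samples, n_bands=4):
    """Split the spectrum into equal-width bands; return each band's share of total energy."""
    mags = dft_magnitudes(samples)
    width = len(mags) // n_bands
    energies = [sum(m * m for m in mags[b * width:(b + 1) * width]) for b in range(n_bands)]
    total = sum(energies) or 1.0  # avoid dividing by zero on a silent signal
    return [e / total for e in energies]


# 150-Hz tone sampled at 800 Hz: 64 samples give 12.5-Hz bins, so it lands in bin 12 (band 1).
features = band_energies(tone(150, 800, 64))
print([round(f, 3) for f in features])
```

Normalizing the band energies makes the signature describe the *shape* of the sound rather than its loudness, so a microphone placed farther from the machine would still produce a comparable vector.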

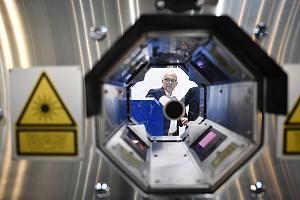

The noncontact microphone sensor also allows the technology to be used in harsh or sensitive environments. “In a CNC milling application, for example, we use a microphone in the work envelope, and it doesn’t touch anything. It’s great if we can have both [contact and noncontact] sensors working together, but using just a microphone, we can still have a good understanding of the machine.”

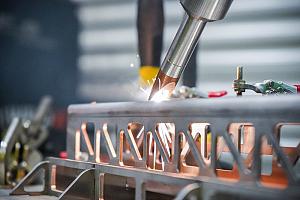

To explain how the technology is applied, Kandasamy described a current application for a very large, two-story forging press. “It is completely nonintrusive. We use both microphones and accelerometers for collecting the data. For this application, we are tracking three or four different areas in the press. One is where the oil flow happens, near the capillary tubes that send the oil in [to the system]. We have an accelerometer on the brake pad, and we have a microphone that is in the chamber where the forging happens.”

As with any AI, OtoSense’s engine needs to be taught. After all, what makes AI so powerful is that it can learn. To that end, technicians feed the system specific information so it can put the data it interprets in context.

Christian described a thermal cutting application, such as plasma or laser cutting, in which the cutting machine emits a distinct sound or vibration for each type of motion. For a given material, for instance, the machine may emit one vibration signature when cutting straight lines, another when cutting circles, and another when traversing between cuts. “All these sounds will be held in context, and that context will be used to improve the AI’s recognition capabilities,” he said.

During the learning phase, a technician works with the system through a dedicated interface. “This is for when the technician is telling the system what each sound means,” Kandasamy said. “But during the second phase, when you’re in real-time detection mode, everything happens at what we call our Enabled Edge device.” The Enabled Edge device, which collects the captured data, can be a tablet, a laptop, or a variety of other network-connected devices.
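The two phases described above, teaching followed by real-time detection, can be illustrated with one of the simplest supervised classifiers, a nearest-centroid model. This is a generic stand-in, not OtoSense's engine: the class name, the `teach`/`classify` methods, and the three-number feature vectors are hypothetical, and the labels simply mirror the straight-cut, circle, and traverse contexts from the cutting example.

```python
import math


class NearestCentroidClassifier:
    """Learn-then-detect sketch: average the labeled examples, then match new sounds to the closest average."""

    def __init__(self):
        self._sums = {}    # label -> element-wise sum of taught feature vectors
        self._counts = {}  # label -> number of examples taught

    def teach(self, features, label):
        """Learning phase: a technician tells the system what this sound means."""
        prev = self._sums.get(label, [0.0] * len(features))
        self._sums[label] = [s + f for s, f in zip(prev, features)]
        self._counts[label] = self._counts.get(label, 0) + 1

    def classify(self, features):
        """Detection phase: return (closest label, Euclidean distance to that label's centroid)."""
        best_label, best_dist = None, math.inf
        for label, sums in self._sums.items():
            centroid = [s / self._counts[label] for s in sums]
            dist = math.dist(features, centroid)
            if dist < best_dist:
                best_label, best_dist = label, dist
        return best_label, best_dist


clf = NearestCentroidClassifier()
# Hypothetical three-band feature vectors for the cutting contexts described above.
clf.teach([0.7, 0.2, 0.1], "straight_cut")
clf.teach([0.6, 0.3, 0.1], "straight_cut")
clf.teach([0.1, 0.7, 0.2], "circle_cut")
clf.teach([0.1, 0.1, 0.8], "traverse")

label, dist = clf.classify([0.65, 0.25, 0.1])
print(label, round(dist, 3))
```

The distance returned alongside the label is useful in its own right: a sound that is far from *every* taught centroid is one the system has never been told about, which is often exactly what a maintenance tech wants to hear.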

After a brief learning period, the system should run on its own. Its output can be fed into a ticketing system that alerts personnel when something’s amiss, and it can be integrated into larger asset control and asset management systems, as well as manufacturing execution systems.

When discussing potential applications in metal fabrication, sources described how various process attributes affect vibration. One is the temperature of the material, which could make the technology useful for monitoring thermal cutting and welding processes. “The vibration will be affected by the heat of the material the vibration is going into,” Christian said. “So yes, the vibration will change [with temperature changes]. By how much exactly? This will be learned by the system.”
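The ticketing step mentioned above might work along these lines: score each incoming window by its distance from a learned "normal" signature, and open a ticket only when the deviation persists. The sketch is an assumption about how such alerting could be wired, not a description of OtoSense's product; `AlertMonitor`, its `threshold` and `patience` parameters, and the ticket fields are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class AlertMonitor:
    """Open a ticket when the sound signature stays far from the learned 'normal' baseline."""

    baseline: list          # hypothetical centroid of normal-operation feature vectors
    threshold: float        # distance above which one window counts as abnormal
    patience: int = 3       # consecutive abnormal windows required before ticketing
    tickets: list = field(default_factory=list)
    _streak: int = field(default=0, init=False, repr=False)

    def observe(self, machine_id, features):
        """Score one window; append a ticket the moment an abnormal streak reaches `patience`."""
        distance = math.dist(features, self.baseline)
        self._streak = self._streak + 1 if distance > self.threshold else 0
        if self._streak == self.patience:  # fires once per streak, not on every later window
            self.tickets.append({"machine": machine_id, "distance": round(distance, 3)})
        return distance


monitor = AlertMonitor(baseline=[0.65, 0.25, 0.10], threshold=0.2)
# Two normal windows, then four windows with a shifted (hypothetical) signature.
for window in [[0.64, 0.26, 0.10]] * 2 + [[0.20, 0.30, 0.50]] * 4:
    monitor.observe("press-1", window)
print(monitor.tickets)
```

Requiring several consecutive abnormal windows trades a little detection speed for far fewer false alarms from a single stray noise, such as a dropped tool near the microphone.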

Also, because the system can use such a variety of sensors, it could be adapted to many different situations. As Christian explained, imagine a foreman with years of experience walking into a grinding or welding area and hearing a grinding wheel “whine” in a way that suggests the operator is pushing too hard, using the wrong abrasive, or employing the wrong grinding technique.

Now imagine a microphone in the area, feeding the sound to an AI system, which then allows the company to correlate sound patterns with other variables. These could include grinding wheel usage, department throughput, or a host of other factors. Workers may never have been taught the right way to grind, or perhaps they are not being given the right tools for the job. Whatever the problem, that initial sound signature captured by the microphone could give fabricators an early indication that something’s amiss.
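Correlating a sound pattern with other shop variables, as described above, can start with something as simple as a Pearson correlation coefficient between two per-shift series. The sketch assumes a hypothetical log pairing minutes of detected "whine" with grinding wheels consumed; the numbers are invented for illustration, and a strong coefficient would only flag a pattern worth investigating, not prove its cause.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (spread_x * spread_y)


# Hypothetical per-shift log: minutes the "whine" signature was detected vs. grinding wheels consumed.
whine_minutes = [5, 12, 8, 20, 15]
wheels_used = [2, 4, 3, 7, 5]
print(round(pearson(whine_minutes, wheels_used), 2))
```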

OtoSense, www.otosense.com

About the Author

Tim Heston

Tim Heston, The Fabricator's senior editor, has covered the metal fabrication industry since 1998, starting his career at the American Welding Society's Welding Journal. Since then he has covered the full range of metal fabrication processes, from stamping, bending, and cutting to grinding and polishing. He joined The Fabricator's staff in October 2007.
